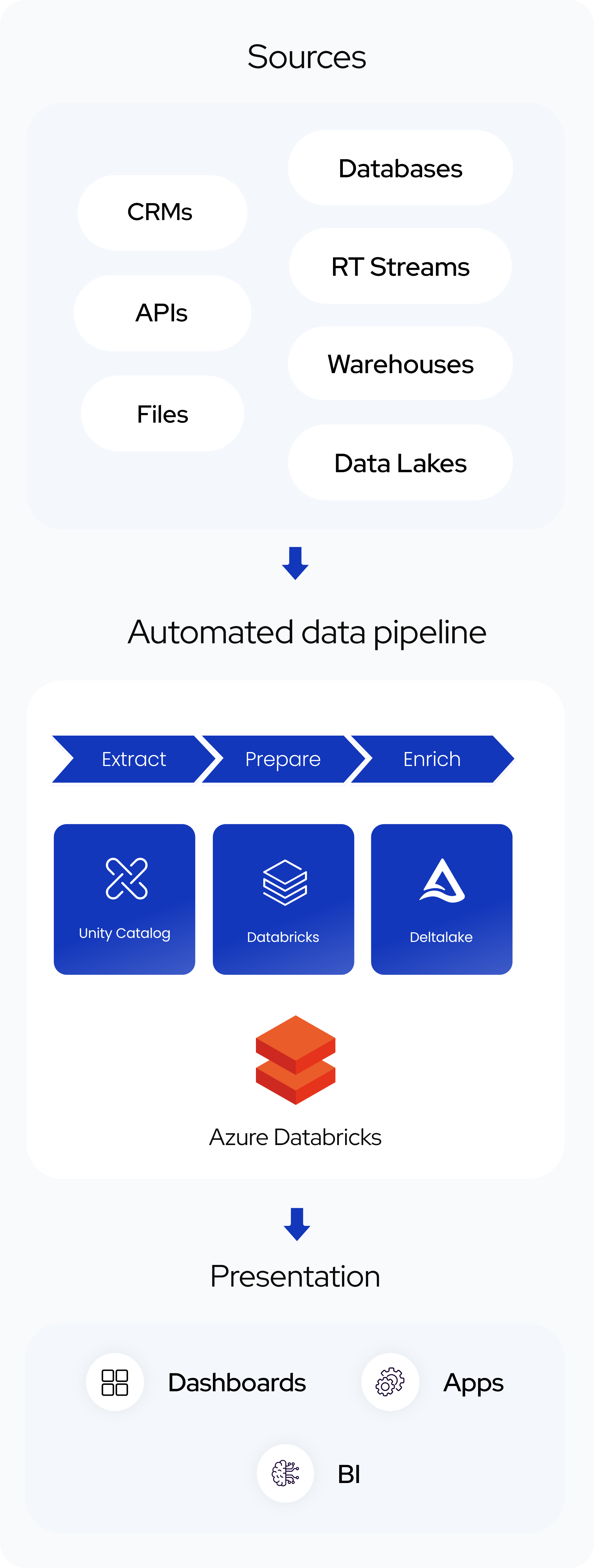

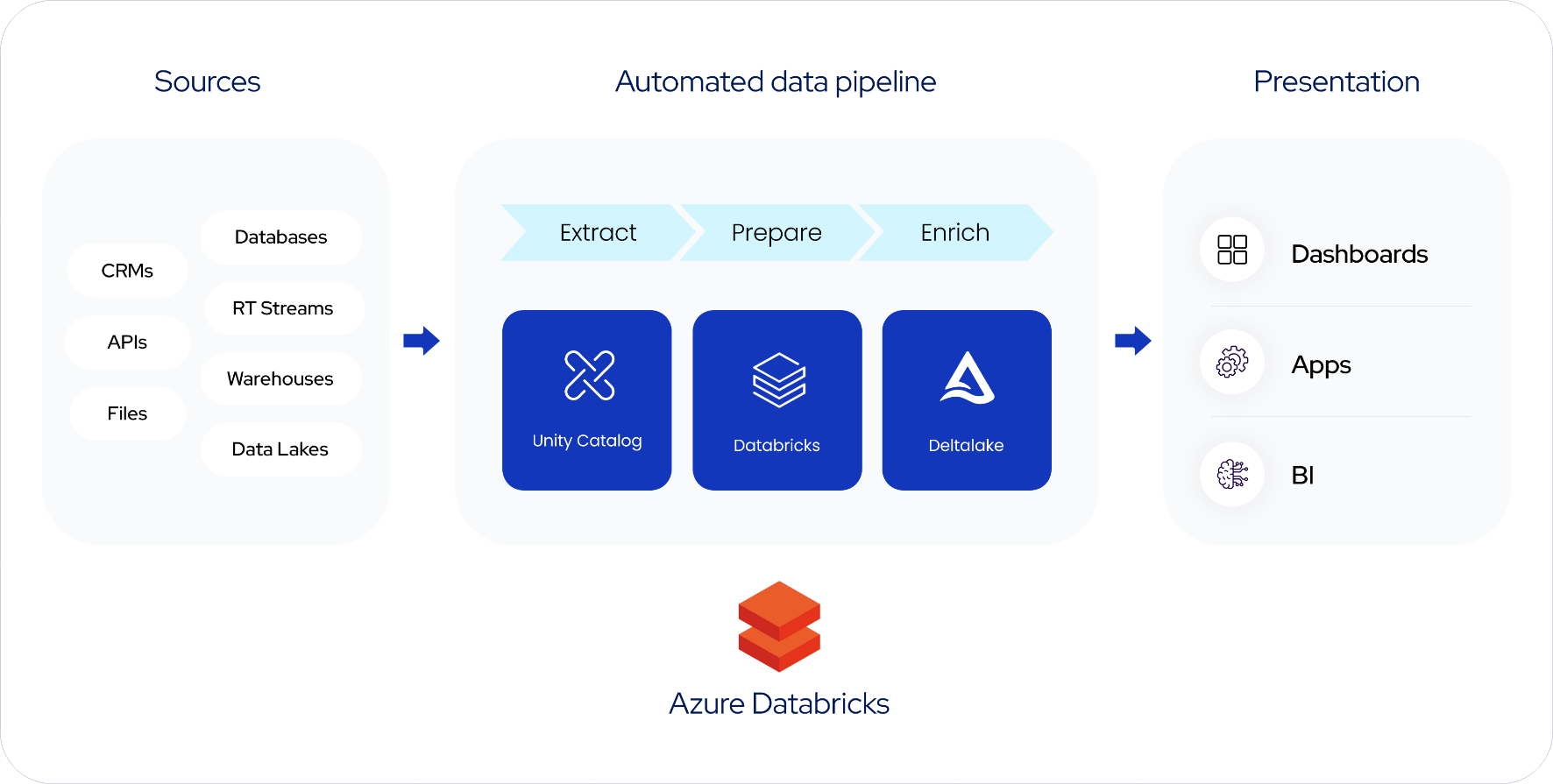

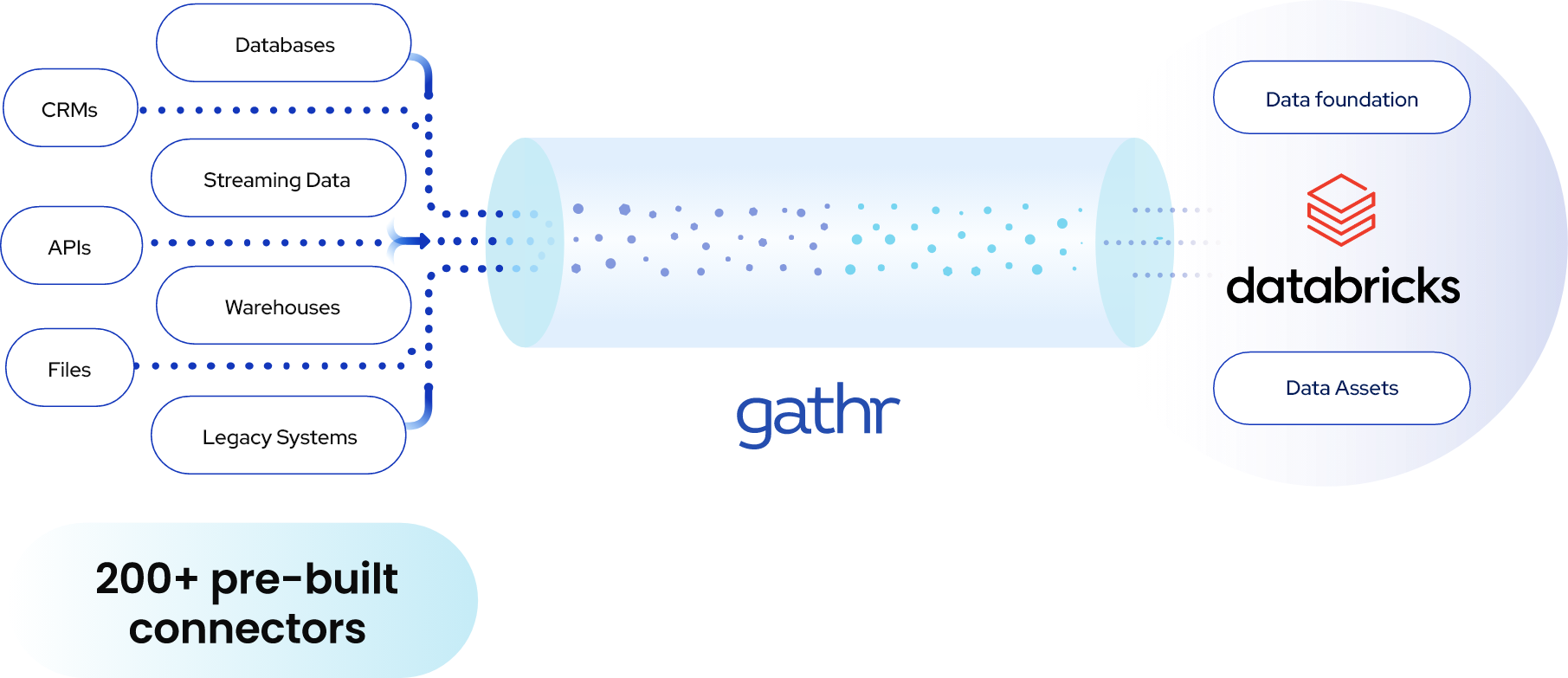

Easily bring all your data into Databricks

Data Foundation - Set the foundation for data analytics, ML, and Gen AI by ingesting quality data into Databricks Delta Lake.

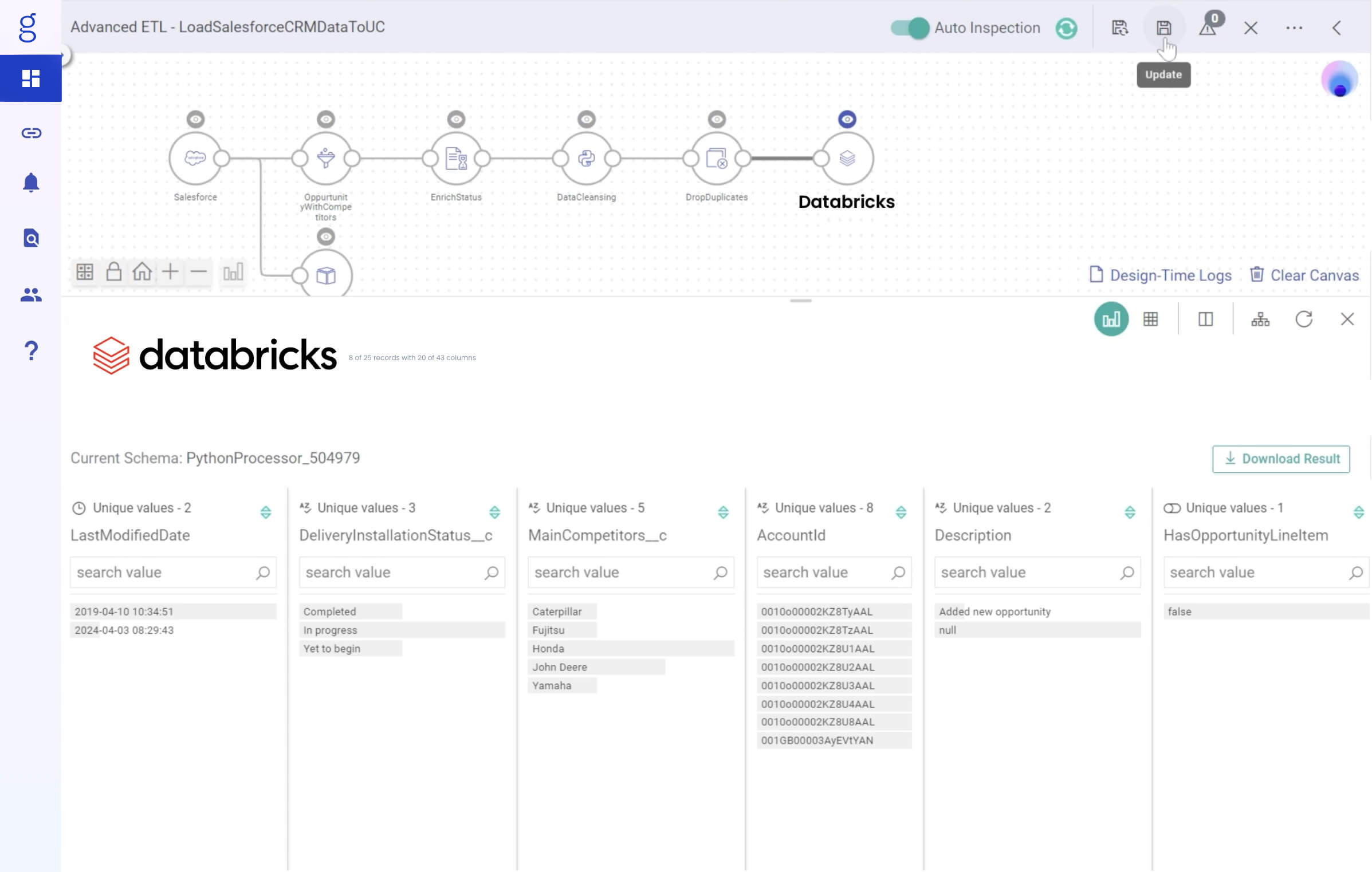

Data Assets - Create a unified repository for storing and accessing diverse datasets with Gathr Ingestion and ETL applications.

Data Pipelines - Streamline data transformation and integration for real-time and batch sources while maintaining the quality and consistency of data in Databricks.

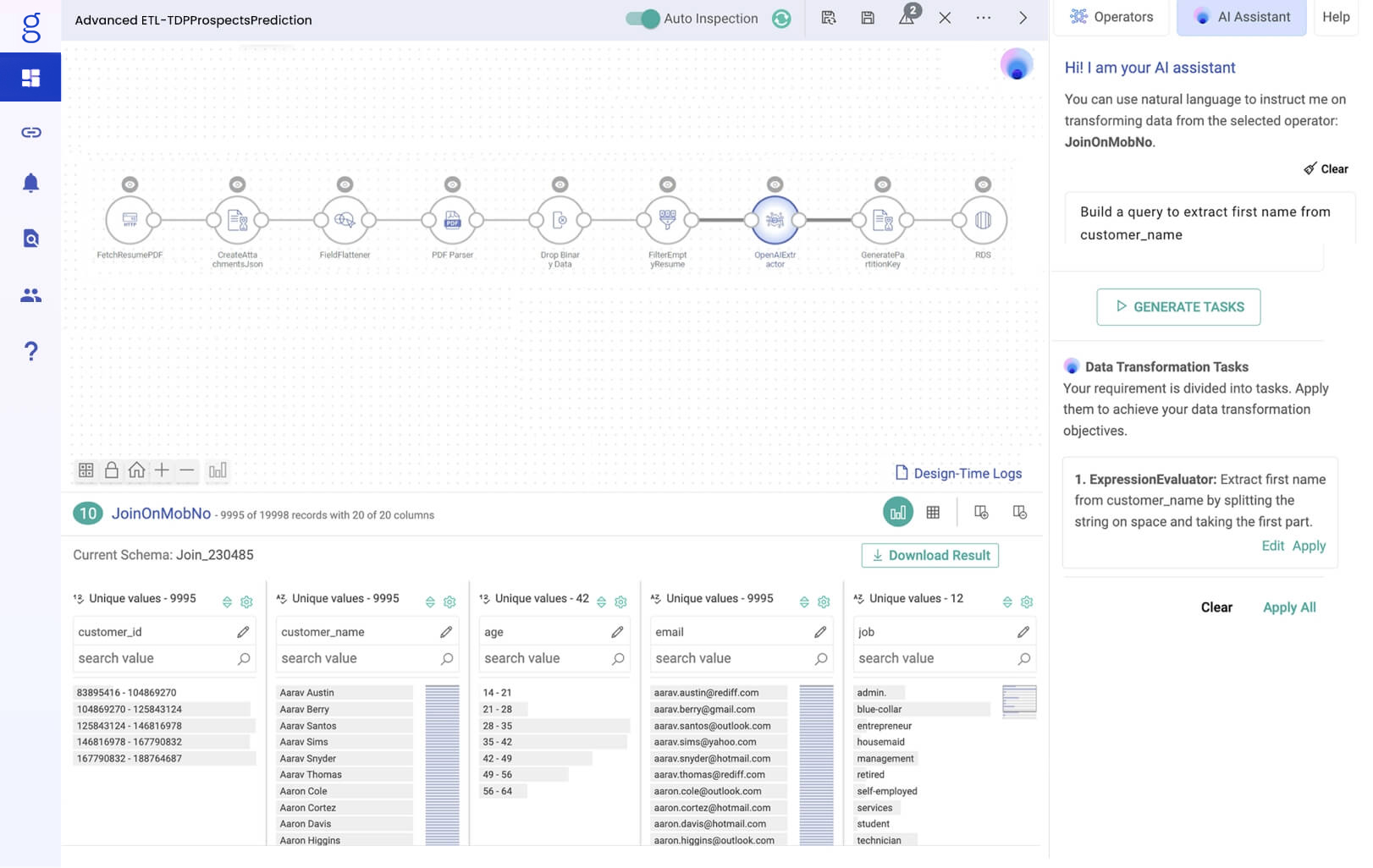

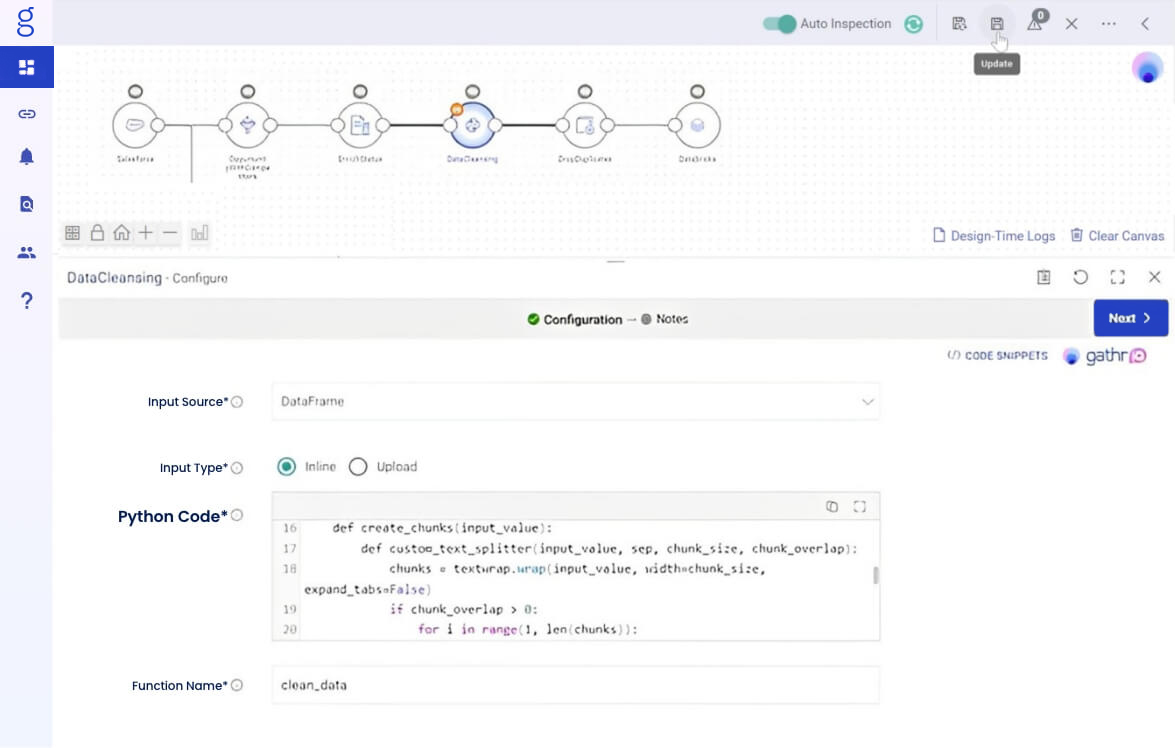

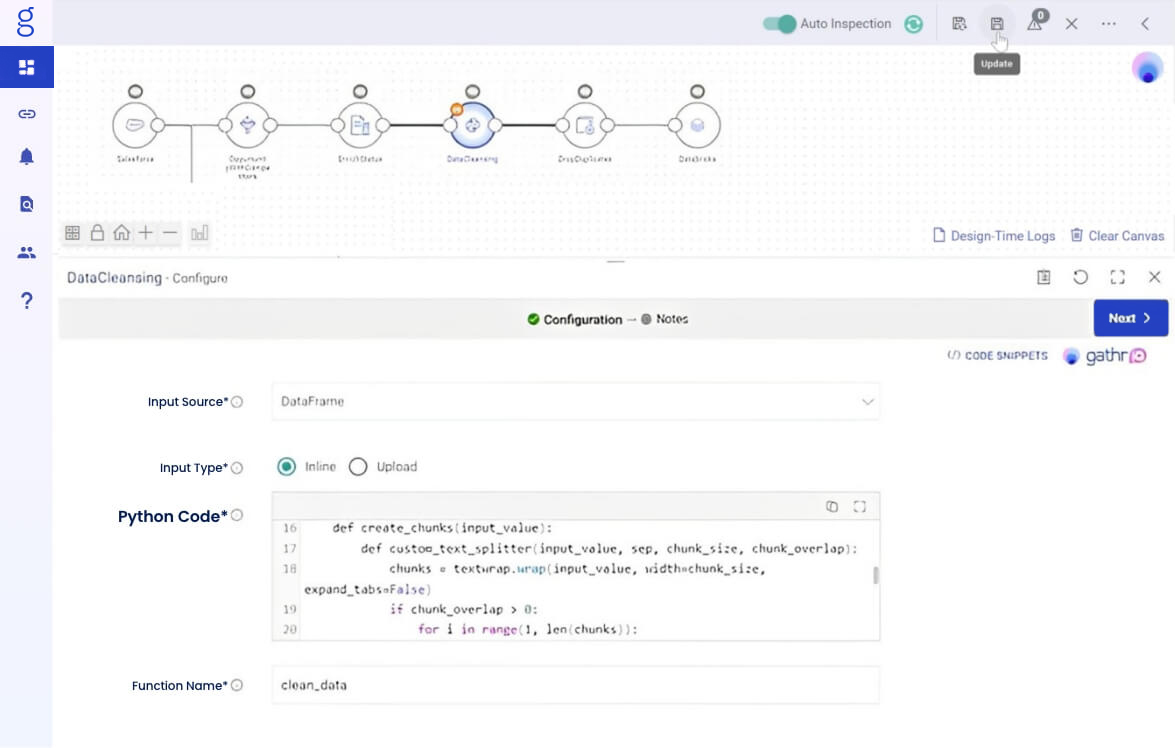

Combines the flexibility of PySpark with no-code ETL

Reuse and blend existing Python code with visual ETL.

Implement custom business logic at scale.

Gen AI-assisted PySpark development.

Enterprise-ready Gen AI capabilities

GathrIQ copilot support throughout your data engineering, analytics, and AI journey.

Traverse the entire data-to-outcome journey using natural language: build pipelines, discover data assets, transform data, create visualizations, and gain insights.

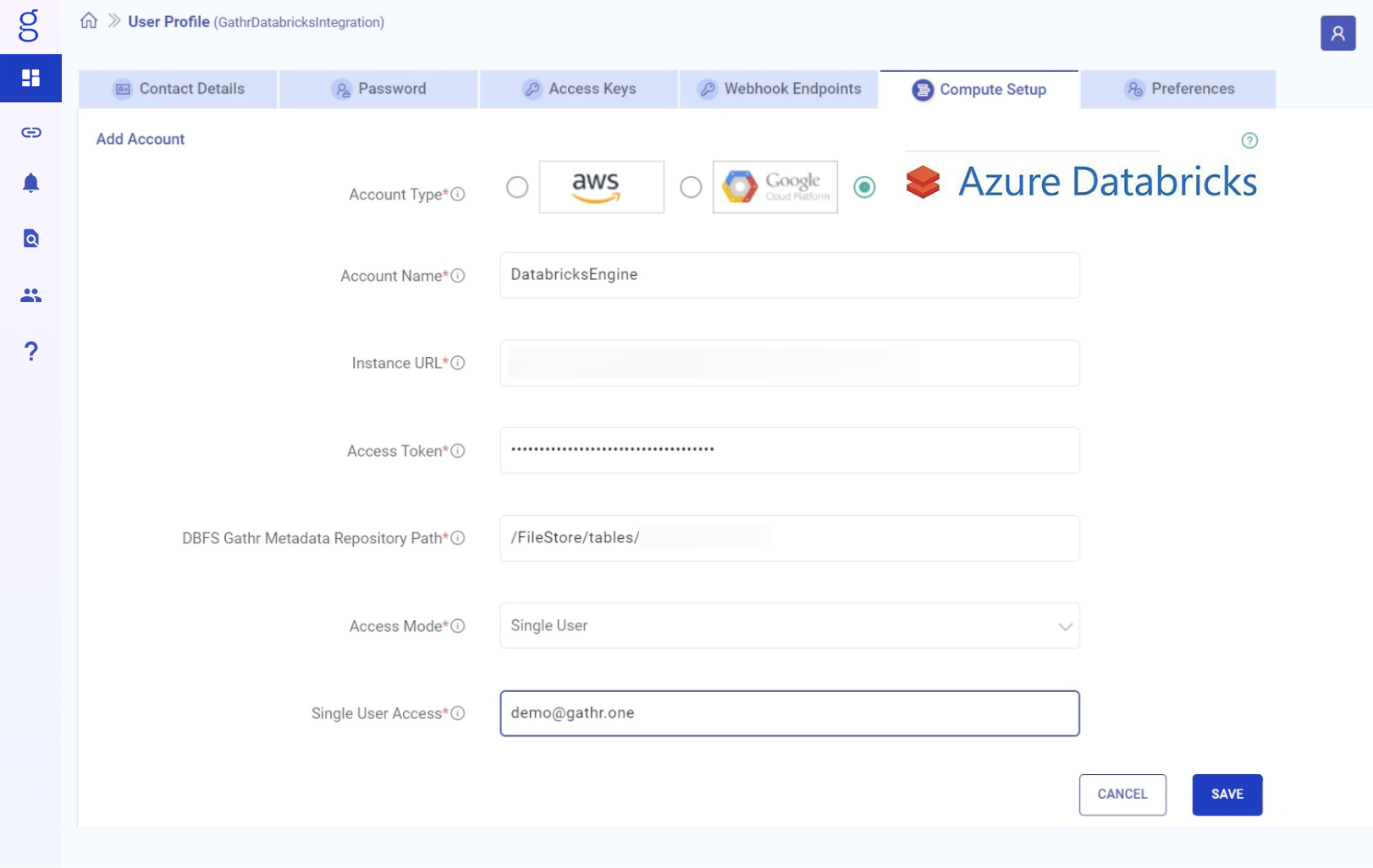

How it works