“Impetus has the opportunity to make StreamAnalytix [now known as Gathr] the de facto tooling standard for Spark and future streaming engines,” states the report.

Forrester has positioned Gathr as a strong performer among the 13 most significant streaming analytics providers in The Forrester Wave™: Streaming Analytics, Q3 2017. The report is one of the few market insight reports focused on streaming analytics today, and perhaps the most comprehensive.

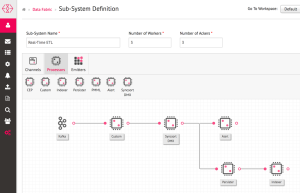

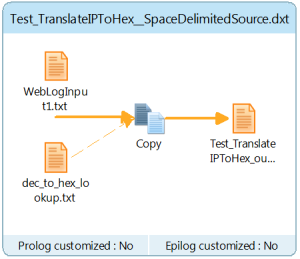

Gathr is a platform that makes it extremely easy to create real-time stream processing and machine learning applications on Apache Spark. It is aimed at enterprises looking for a single visual platform that leverages popular open-source big data platforms for streaming ETL and advanced analytics, and that is easy to use for both business and technical users.

What also shines about Gathr solution is that it includes enterprise-grade visual tooling for both development and deployment of streaming applications.

–An excerpt from The Forrester Wave™: Streaming Analytics, Q3 2017

Forrester’s evaluation of Gathr aligns with our key focus areas. The report’s vendor profile section highlights the following aspects of Gathr:

- Gathr offers use of both Apache Storm and Apache Spark and is architecturally positioned to support future use of other open-source streaming engines such as Apache Flink

- Gathr embeds EsperTech to provide advanced streaming analytics capabilities such as complex event processing

- Gathr tooling also unifies streaming and batch by supporting arbitrary Spark jobs such as machine learning

For more information on Gathr coverage in the Forrester Wave, read the full press release.